OpenFn's Vocabulary Mapper: Simplifying Healthcare Term Mapping

Solving Real-World Interoperability Challenges

Fragmented public health data continues to hinder effective healthcare delivery and emergency response worldwide. Manual data mapping between disparate systems is tedious, error-prone and financially unsustainable at scale, and it creates significant barriers to timely interventions that could save lives.

Recently at OpenFn, we encountered this challenge first-hand when mapping a large volume of healthcare terms from a CommCare source system to standardised LOINC clinical terms in a target system. This work was part of Indonesia’s digital reform initiatives in healthcare, driven by the fragmentation of health data and the gaps exposed during COVID-19. With tens of thousands of target terms to search through, finding the correct match requires careful comparison of similar entries and significant domain knowledge. The process consumes healthcare and data experts’ time that could be better spent elsewhere.

Our Solution: A Human-Led AI Mapping Tool

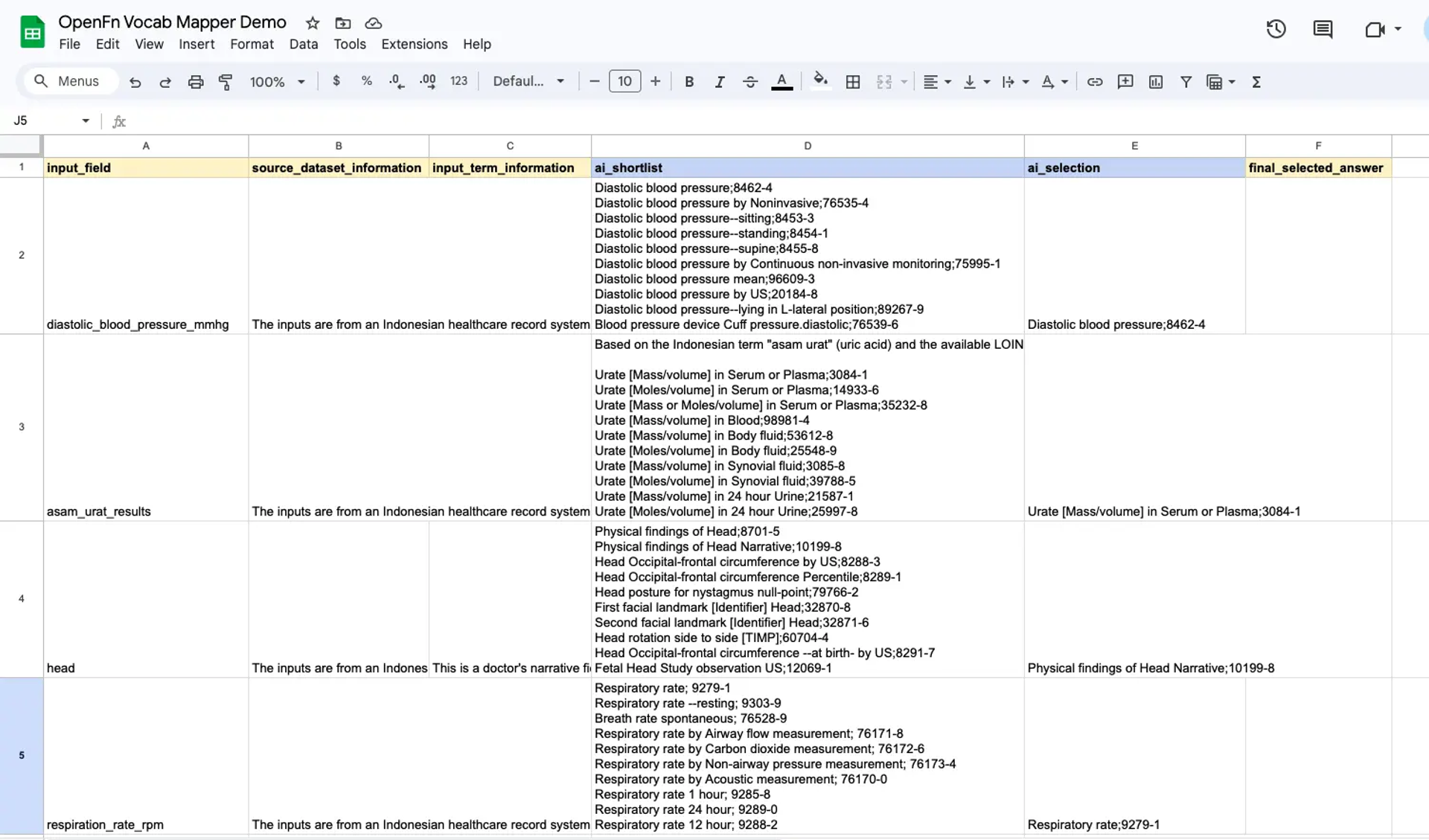

To address this challenge, we developed a vocabulary mapping tool that is used through Google Sheets. You enter your source terms alongside any relevant context about the source dataset or about individual terms. The tool then triggers an OpenFn workflow that populates the spreadsheet with suggested target terms and codes. See a video demo here.

With this approach, users have full control and responsibility over the final mapping decisions. The tool offers AI-suggested matches alongside shortlists of potential alternatives, allowing domain experts to quickly validate or override suggestions, or add essential context and iterate on the suggestions.
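To make the human-led review concrete, here is a minimal sketch of how one spreadsheet row could be modelled. The field names (`suggested_code`, `shortlist`, `reviewer_code`, `approved`) and the placeholder codes are assumptions made for illustration; they are not the actual column names or codes used by the tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MappingRow:
    """One row of a hypothetical mapping sheet (illustrative field names)."""
    source_term: str
    context: str = ""
    suggested_code: Optional[str] = None                 # AI-suggested match
    shortlist: list[str] = field(default_factory=list)   # alternative candidates
    reviewer_code: Optional[str] = None                  # override typed by the expert
    approved: bool = False                               # expert accepted the suggestion

    def final_code(self) -> Optional[str]:
        # The expert's override always wins; a suggestion only counts
        # once a human has explicitly approved it.
        if self.reviewer_code:
            return self.reviewer_code
        return self.suggested_code if self.approved else None


# Placeholder codes, not real LOINC codes.
row = MappingRow("Suhu tubuh", suggested_code="T-001", shortlist=["T-001", "T-002"])
unreviewed = row.final_code()   # no decision yet, so no final mapping
row.approved = True
accepted = row.final_code()     # suggestion accepted by the expert
row.reviewer_code = "T-002"
overridden = row.final_code()   # expert picked an alternative from the shortlist
```

In this sketch an unreviewed suggestion never becomes a final mapping, which mirrors the idea that responsibility for the decision stays with the domain expert.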

Technical Implementation: How It Works Behind the Scenes

Our vocabulary mapper employs a multi-step pipeline that combines semantic and keyword search with a sequence of Large Language Model (LLM) calls. This architecture allows us to leverage an LLM's reasoning capabilities while minimising the risk of hallucinations by grounding the process in terminology databases.

The pipeline consists of the following key steps:

- Search Term Optimisation: Rather than directly using the input term for searching, we first query an LLM (currently Claude) to determine the optimal search terms based on any provided contextual information. This approach addresses two common challenges:

  - Input terms often differ structurally from target terms (e.g. different naming conventions or non-English acronyms)

  - The mapping task might require conceptual translation rather than direct matching (such as mapping medical conditions to related body sites)

- Dual Search Strategy: Using the optimised search terms, we perform both semantic and keyword searches against the target terminology database.

- Result Filtering: The search results are processed through another LLM call that reasons about the top candidates and selects a shortlist of the most relevant matches based on the available contextual information.

- Final Selection: A final LLM step identifies the single best match, which is presented as the primary suggestion.
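The four steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not OpenFn's implementation: the three LLM steps are passed in as plain functions and replaced by hard-coded stubs, the terminology "database" is a four-item list with placeholder codes (not real LOINC codes), and semantic search is swapped for a toy character-trigram overlap so that the example runs without an embedding model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Term:
    code: str  # placeholder identifier, not a real LOINC code
    name: str


# Hypothetical stand-in for the target terminology database.
TARGET_TERMS = [
    Term("T-001", "Body temperature"),
    Term("T-002", "Body weight"),
    Term("T-003", "Heart rate"),
    Term("T-004", "Respiratory rate"),
]


def keyword_search(query: str, terms: list[Term], k: int = 3) -> list[Term]:
    """Rank terms by the number of whole words they share with the query."""
    words = set(query.lower().split())
    scored = [(len(words & set(t.name.lower().split())), t) for t in terms]
    hits = [(s, t) for s, t in scored if s > 0]
    return [t for _, t in sorted(hits, key=lambda pair: -pair[0])[:k]]


def _trigrams(text: str) -> set[str]:
    text = text.lower()
    return {text[i:i + 3] for i in range(len(text) - 2)}


def fuzzy_search(query: str, terms: list[Term], k: int = 3) -> list[Term]:
    """Toy stand-in for semantic search: Jaccard overlap of character trigrams."""
    q = _trigrams(query)

    def score(t: Term) -> float:
        n = _trigrams(t.name)
        return len(q & n) / len(q | n) if (q | n) else 0.0

    ranked = sorted(terms, key=score, reverse=True)
    return [t for t in ranked[:k] if score(t) > 0]


def map_term(
    source: str,
    context: str,
    optimise: Callable[[str, str], str],
    shortlist: Callable[[str, str, list[Term]], list[Term]],
    select: Callable[[str, str, list[Term]], Term],
    terms: list[Term] = TARGET_TERMS,
) -> tuple[Optional[Term], list[Term]]:
    query = optimise(source, context)                    # 1. search term optimisation
    candidates: list[Term] = []
    for t in keyword_search(query, terms) + fuzzy_search(query, terms):
        if t not in candidates:                          # 2. dual search, de-duplicated
            candidates.append(t)
    short = shortlist(source, context, candidates)       # 3. result filtering
    best = select(source, context, short) if short else None  # 4. final selection
    return best, short


# Hard-coded stubs standing in for the three LLM calls.
def fake_optimise(source: str, context: str) -> str:
    return {"suhu tubuh": "body temperature"}.get(source.lower(), source)


def fake_shortlist(source: str, context: str, candidates: list[Term]) -> list[Term]:
    return candidates[:2]


def fake_select(source: str, context: str, short: list[Term]) -> Term:
    return short[0]


best, short = map_term("Suhu tubuh", "vital signs form",
                       fake_optimise, fake_shortlist, fake_select)
```

Because every candidate passed to the later steps comes from a search over the terminology list, the stubs (and, in a real system, the model) can only choose among entries that actually exist.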

While asking an LLM to map between terminologies directly tends to produce hallucinated codes, decomposing the task lets us control the reasoning at each step and constrain the results to codes that actually exist in the target terminology.
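One simple way to enforce the "real codes only" constraint is to check the model's answer against the retrieved candidates before accepting it. The sketch below is an assumption about how such a guard could look, not a description of the tool's internals; `constrained_choice` is a hypothetical helper and the codes are placeholders.

```python
from typing import Optional


def constrained_choice(llm_answer: str, candidates: dict[str, str]) -> Optional[str]:
    """Accept the model's answer only if it is one of the retrieved codes.

    `candidates` maps code -> display name for the terms returned by search.
    Anything else, including a plausible-looking invented code, is rejected.
    """
    code = llm_answer.strip()
    return code if code in candidates else None


# Placeholder codes, not real LOINC codes.
retrieved = {"T-001": "Body temperature", "T-002": "Body weight"}

valid = constrained_choice(" T-001\n", retrieved)   # whitespace is tolerated
invented = constrained_choice("T-999", retrieved)   # not in the search results
```

Returning `None` rather than guessing leaves the cell empty for the reviewer, which keeps a hallucinated code from ever reaching the spreadsheet in this sketch.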

Currently, we've focused our experiments on LOINC as the target dataset, but the underlying pipeline is designed to be adaptable to different terminology systems. We're developing the tool to support a wider range of source terms and target datasets.

If you're dealing with similar data mapping challenges in your organisation, we'd be interested in hearing about your use case. We believe that automating this process while adhering to international standards is crucial for effective data sharing between governments, NGOs, and healthcare providers – ultimately enabling more timely interventions and improving public service delivery worldwide.

Contact us on community.openfn.org or by email at admin@openfn.org.

Written by Hanna Paasivirta